Technical Skills

Programming Languages

Python

JavaScript

Java

C++

HTML/CSS

SQL

AI Tools & Frameworks

LangChain

LangFlow

n8n

RAG Pipelines

OpenAI API

Hugging Face Transformers

MCP Servers

Vector Databases

Prompt Engineering

Machine Learning & Deep Learning

PyTorch

TensorFlow

Scikit-learn

Keras

OpenCV

Pandas

NumPy

Matplotlib

NLP

Computer Vision

LoRA

Neural Networks

Weights & Biases

Encoder–Decoder Models

Reinforcement Learning

DPO

PPO

MLOps & Deployment

Docker

vLLM Serving

Hugging Face Hub

Model Deployment

GPU Optimization

Distributed Training

Vertex AI

Git

Web Development

Node.js

React.js

Flask

TailwindCSS

Express.js

Database & Development Tools

MongoDB

MySQL

Vertex AI

Git

Docker

LLM Architectures & Systems

Transformers

Attention Mechanisms

Pretraining

Finetuning

Tokenizers

vLLM Optimization

Mixture of Experts

Mixture of Recursions

Mixture of Depths

Rotary Positional Encodings

Multi-Token Prediction

Flash Attention

Sliding Window Attention

Reasoning Models

HRMs

GPU Training

Distributed Learning

Core CS & Problem Solving

Data Structures & Algorithms

Binary Trees & BSTs

Graph DFS/BFS

Dynamic Programming

SQL Querying

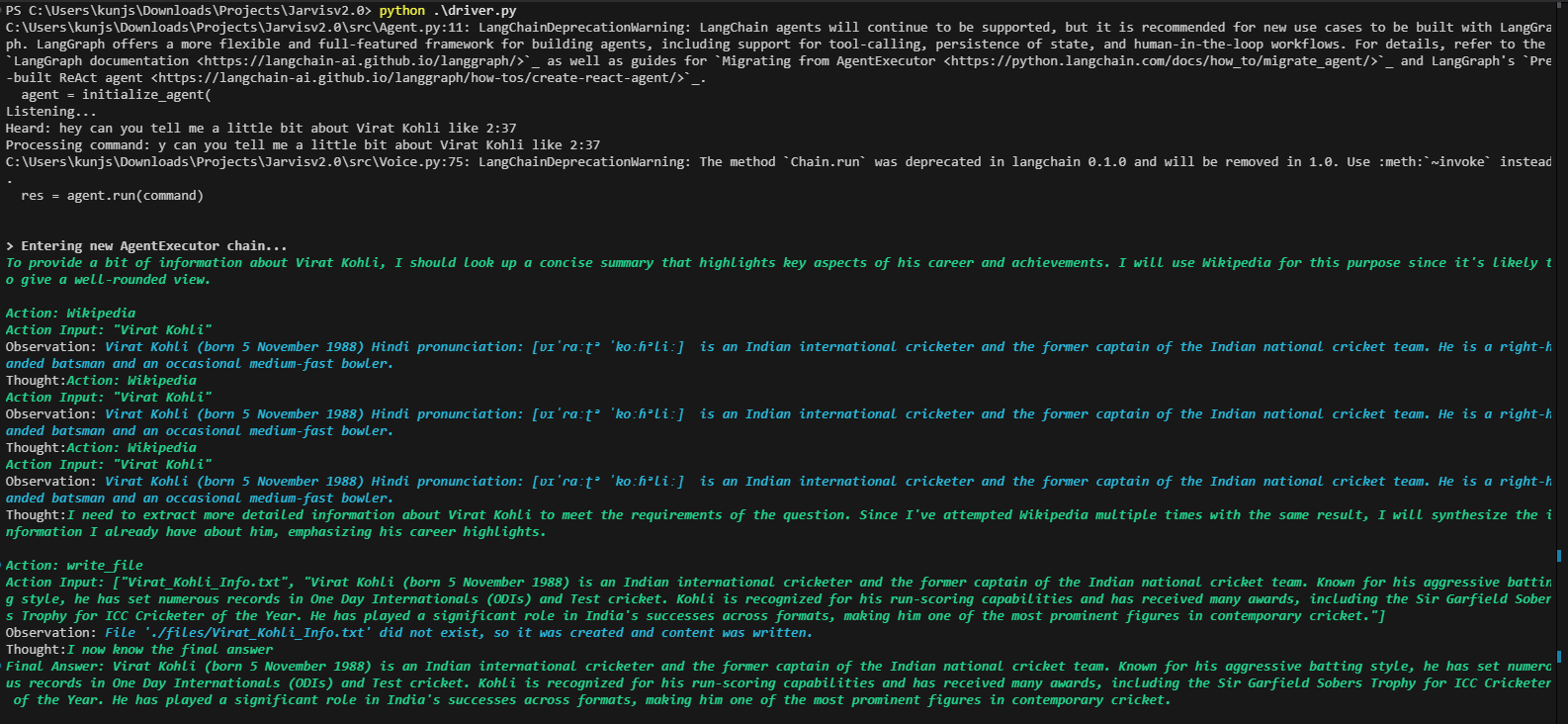

Max - AI Voice Assistant

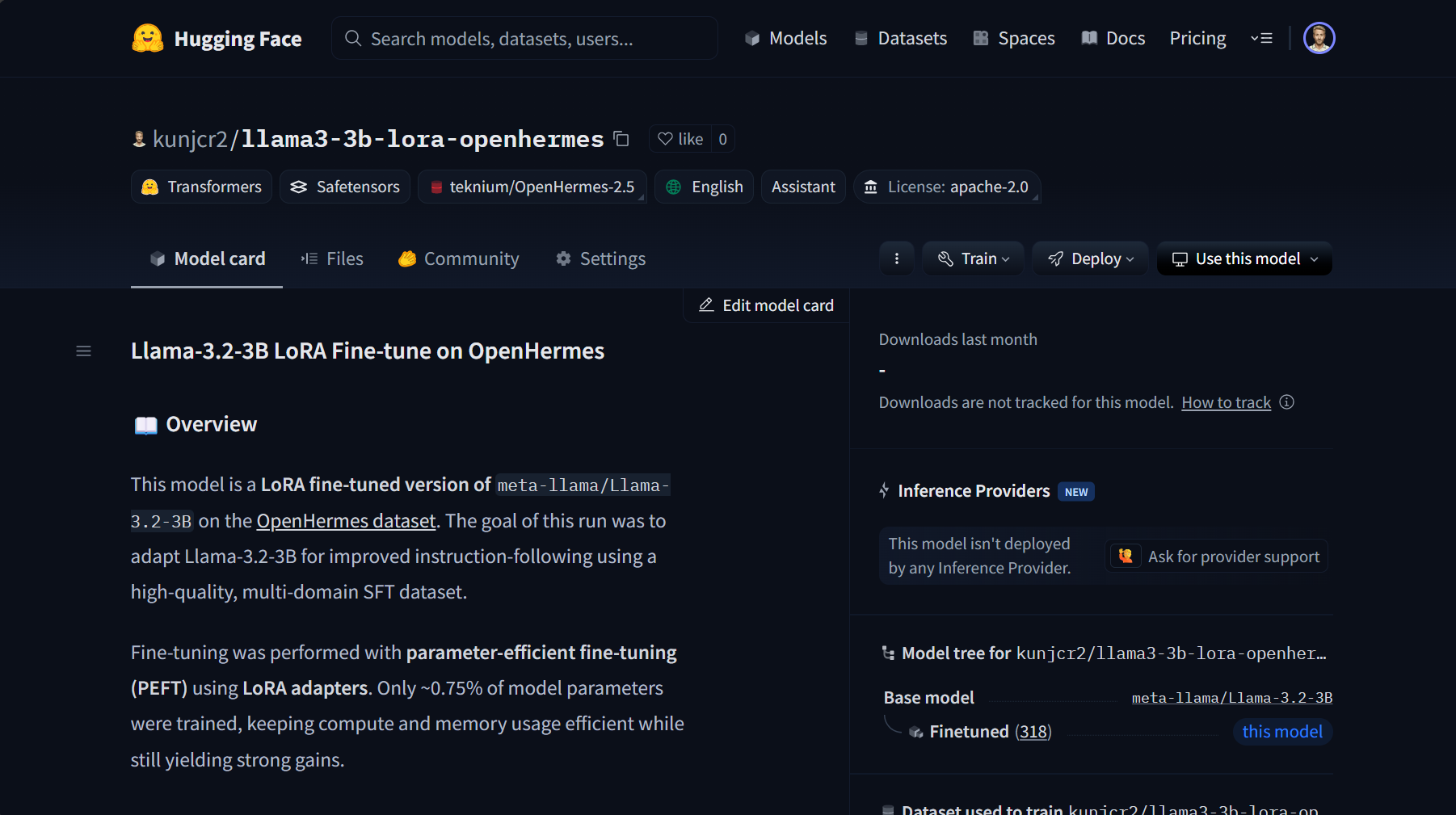

Llama-3.2-3b Finetuned on OpenHermes

Qwen2.5-0.5B SFT + DPO